According to the breathless assessments of some commentators, ChatGPT is poised to replace contact centers in every sphere of business – including the armies of agents currently employed in the insurance industry worldwide. Even the World Economic Forum predicts

Really?

Look,

Awkward assistants

In 2011, the IBM Watson supercomputer scooped up a $1 million prize on the American TV show "Jeopardy!", besting champions Brad Rutter and Ken Jennings. IBM donated the winnings to charity. The two men would go on to win future games. Watson "has been reduced to a historical footnote," The Atlantic reported in a May 5, 2023, essay,

By 2014,

Incorrect answers

For insurers, these technologies are simply not good enough today to be significantly useful. When claimants reach out to insurers, they want information that will guide them through major life decisions. Finding out later that the information was incorrect – or incomplete – is a big problem. It is bad enough if a human agent gives the wrong information; it is potentially more legally hazardous if a chatbot does.

Alexa, Google Assistant, Siri, ChatGPT, and others in the chatbot family are good enough to help people create drafts, but they're not good enough for insurance. If COVID taught us anything, it is that humans need humans. People need to talk to brokers, to insurers, and to agents who are product specialists and can provide advice with empathy.

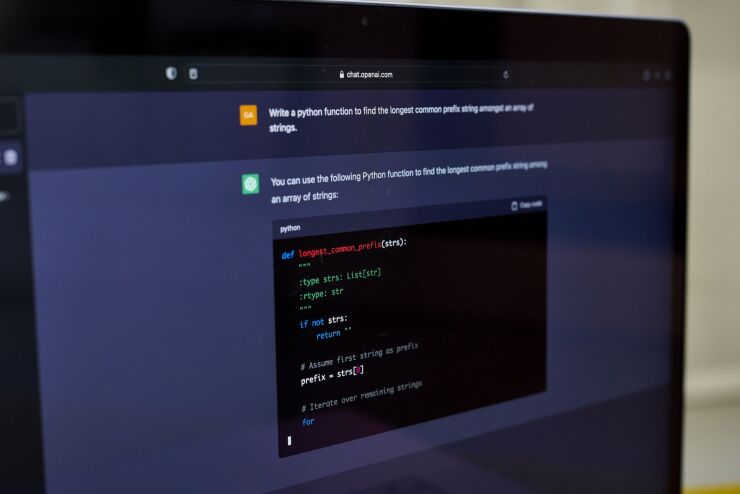

One alarming aspect of ChatGPT – hallucinations, or factual errors – should give everyone cause for concern. Contact center agents are expected to avoid factual errors. Yesterday's AI contact center tools might have produced errors too, but most of the time they were easy to spot. ChatGPT today can not only be wrong but can also fabricate information and pass it off as accurate. Unless they are subject-matter experts, customers will believe ChatGPT's convincing arguments.

How did ChatGPT acquire the persuasive skill to make good and bad information look alike? It was trained on the internet, an impressive source of both information and disinformation.

Exclusive insurance solution

"Wait!" comes the cry from the insurance world. "All we need is a ChatGPT that understands our own insurer information and ignores the rest of the internet!" One would think that would address the problem, but think again. True, some people are working to give ChatGPT topic-specific information against which it can answer questions.

The trouble is that, while this additional training data increases ChatGPT's knowledge, it doesn't turn off the original data that ChatGPT acquired during its initial training on internet sources.

I have found that ChatGPT can work well when all you need is a single answer to a question, but the chatbot is prone to oversimplification when there are multiple answers. For example, it can tell you where the Taj Mahal is, and even throw in some interesting fun facts. But when I asked ChatGPT to digest a policy document, it confused coverage levels and restrictions on different benefits. It tends to treat the document as a single set of facts, selecting the first one that seems to match the question. It doesn't notice when there are multiple, differing answers that depend on the product, coverage, state, or other factors.

Google's

Government guardrails

Microsoft, an OpenAI investor, is rolling out ChatGPT into its products (as

Meanwhile, as governments and

Government policies aside, here's one suggestion: could the industry come together to train an LLM on trustworthy industry sources, such as the training material we use to bring new professionals into the industry? With enough reliable material, an insurance LLM could pass insurance exams. That would be a huge first step.

We are confident that LLMs could revolutionize how insurers communicate with customers and business partners. Whether that happens tomorrow, in three years' time, or in 30 years' time is another question. As most readers know, these technologies move quickly until they hit a wall; then they can stall for years or decades.

The pace of LLM innovation is impressive. Whether ChatGPT pans out for insurers, and for the industry as a whole, depends on timing, some luck, and deliberate decisions about which strategy each company chooses to pursue. More likely, it is the wider LLM landscape, rather than ChatGPT alone, that will yield opportunities to change the game over time.