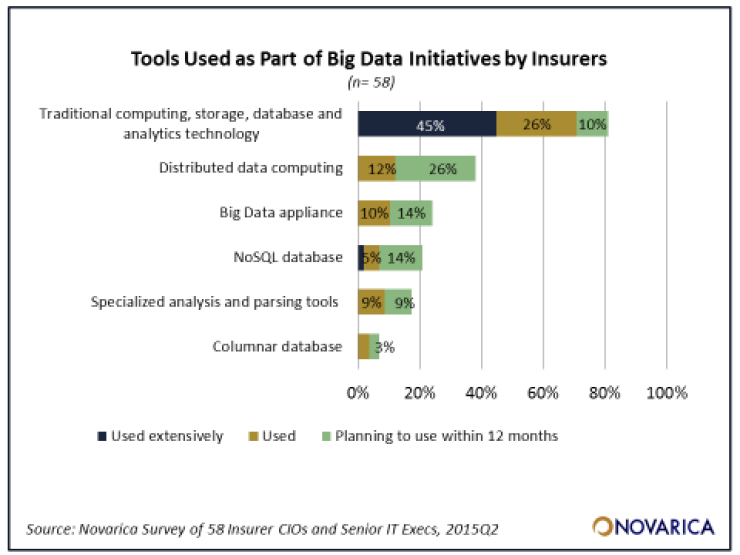

With the recent publication of Novarica’s Analytics and Big Data at Insurers report, it’s time to take an honest look at the state of big data in the industry. One of the most telling (and disappointing) charts showed that, of the insurers working with big data, seventy percent were using traditional computing, storage, database, and analytics technology, meaning they’re working with SQL databases in their existing environment. Each of the other technology options (Hadoop, NoSQL, columnar databases, etc.) is used by only a small percentage of insurers, and almost no insurer uses them extensively.

Compare that to the percentage of insurers who say they are using big data sources, which is significantly higher than the percentage using big data technology. Third-party consumer or business data, geospatial data, and weather data top the list, with audio/video data, telematics, social-media content, and internet clickstreams lagging behind. But what’s really happening when those big data sources are loaded into a traditional structured database? Most likely, a few key elements are pulled from the data and the rest is ignored, allowing the so-called big data to be treated like “small data” and processed along with all the policy, claims, and customer data already being stored. For example, while a weather feed might arrive with minute-by-minute updates, most insurers are probably pulling only regional conditions along with daily temperature highs and lows, filtering the feed down to a subset that stores easily. I’m not saying such reduced data doesn’t improve an insurer’s understanding of incoming claims, but it’s far from what we imagine when we think about how a big data analysis of the weather might change our understanding of insurance claim patterns.

There’s no denying that there are a few exciting use cases of insurers truly leveraging big data, but they are still extremely limited. The industry as a whole is getting much better at data analysis and predictive modeling in general, where the business benefits are very clear. But the use cases for true big data analysis are still ambiguous at best. Part of the allure of big data is that insurers will be able to discover new trends and new risk patterns once those sources are fully leveraged, but “new discoveries” is another way of saying “we’re not yet sure what those discoveries will be.” For many insurers, that’s not a compelling enough rationale for investing in an unfamiliar area.

And that investment is the second problem. The biggest insurers may have the budget to hire and train IT staff to build out a Hadoop cluster or set up several MongoDB servers, but small to mid-size insurers are already stretched to their limits. Even insurers who dedicate a portion of their IT budgets to innovation and exploration are focusing on more reliable areas.

What this means is that, no matter how many surveys show insurers anticipating its adoption, big data technology will likely not see a significant increase in use across the industry. However, that doesn’t mean the industry will let big data pass it by. Instead, much of the technology innovation around big data will need to come from the vendor community.

We’re already seeing a growing number of third-party vendors that provide tools and technology for better analysis and deeper understanding of big data: a second generation of big data startups. Most of these vendors, however, expect that the insurer will already have a Hadoop cluster or big data servers in place, and (as we know) that’s exceedingly rare. Instead, vendors need to start thinking about offering insurers a “big data in a box” approach. This could mean SaaS options that host big data in the cloud, appliances that provide both the analysis and the infrastructure, or even just a mix of professional services and software sales to build and manage the insurer’s big data environment on which the licensed software will run.

We’ll also see insurance core system vendors begin to incorporate these technologies into their own offerings. The same thing has happened with traditional data analytics, with many top policy admin vendors acquiring or integrating with business intelligence and analysis tools. Eventually they’ll take a similar approach to big data.

And finally, some third-party vendors will move the big data process outside the insurer entirely, instead selling access to the results of the analysis. We’re already seeing vendors like Verisk and LexisNexis use their cross-industry databases to take on more and more of the task of risk and pricing assessment. Lookups like driver ratings, property risk, and experience-based underwriting scores will become as common as credit checks and vehicle history reports. These third-party players will be in a better position to gather big data sources and augment their existing business with them, leveraging industry-wide information rather than a single book of business. This means that smaller insurers can skip building out their own big data approach and still get the benefits without the technology investment, letting them compete against bigger players even if their own store of data is relatively limited.

So while the numbers in Novarica’s latest survey may look low and the year-on-year trend may show slow growth, that doesn’t mean big data won’t transform the insurance industry. It just means that transformative change will come from many different directions.

This blog entry has been republished with permission.

The opinions posted in this blog do not necessarily reflect those of Insurance Networking News or SourceMedia.